If you’re a Linux user, you’re probably familiar with the command-line interface and the Bash shell. What you may not know is that there are a wide variety of Bash utilities that can help you work more efficiently and productively on the Linux platform. Whether you’re a developer, system administrator, or just a curious user, learning how to use these utilities can help you take your Linux experience to the next level.

In this article, we’ll explore 10 of the most powerful Bash utilities and show you how they can be used to search for text, process structured data, modify files, locate files or directories, monitor running processes, and synchronize data between different locations. So, if you’re ready to enhance your Linux experience, let’s dive in and discover the power of Bash utilities.

10 Bash utilities to enhance your Linux experience

These utilities can help you do everything from managing processes to editing files, and they’re all available right from the command line.

1. grep

If you’ve ever needed to search for a specific string of text in a file or output, you’ve likely used grep. This command-line utility searches for a specified pattern in a given file or output and returns any matching lines. It’s an incredibly versatile tool that can be used for everything from debugging code to analyzing log files.

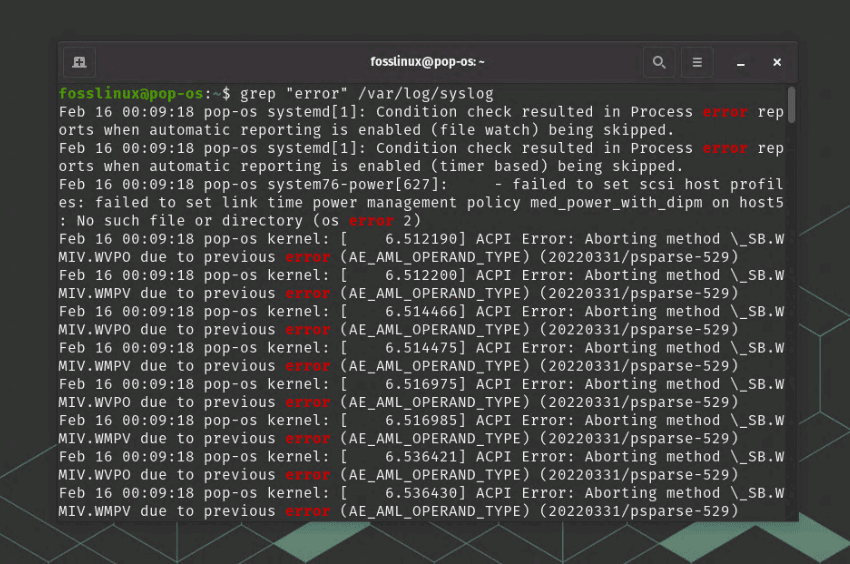

Here’s a simple example of how to use grep:

grep "error" /var/log/syslog

grep command to highlight error in the log file

This command searches the syslog file and prints every line that contains the string “error”. The match is case-sensitive and also finds longer words such as “errors”, and the pattern can be a regular expression as well as a plain string. You can use the “-i” option to make the search case-insensitive, or the “-v” option to print only the lines that do not match. On many distributions, reading /var/log/syslog requires sudo.
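If you’d like to experiment with these options without touching your system logs, here’s a minimal sketch that creates a small throwaway log file. The file name sample.log and its contents are made up for illustration; the grep options themselves (-i, -v, -n, -c) are standard.

```shell
# Throwaway sample log with made-up contents, so nothing under /var/log is touched
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
printf '%s\n' \
  'Jan 10 10:00:01 host app: ERROR disk full' \
  'Jan 10 10:00:02 host app: error while retrying' \
  'Jan 10 10:00:03 host app: write ok' > "$tmp/sample.log"

# Case-sensitive by default: matches only the lowercase "error" line
grep "error" "$tmp/sample.log"

# -i ignores case, so both the "ERROR" and "error" lines match
grep -i "error" "$tmp/sample.log"

# -v inverts the match; combined with -i it prints lines without "error" in any case
grep -vi "error" "$tmp/sample.log"

# -n prefixes each match with its line number, -c prints only the count
grep -in "error" "$tmp/sample.log"
grep -ic "error" "$tmp/sample.log"
```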

2. awk

Awk is a powerful utility that can be used to process and manipulate text data. It’s particularly useful for working with delimited data, such as CSV files. Awk allows you to define patterns and actions that are applied to each line of input data, making it an incredibly flexible tool for data processing and analysis.

Here’s an example of how to use awk to extract data from a CSV file:

awk -F ',' '{print $1,$3}' some_name.csv

This command sets the field separator to “,” and then prints the first and third fields of each line in the some_name.csv file, separated by a space (awk’s default output separator). You can use awk for more complex operations as well, such as calculating totals, filtering rows, and combining data from multiple files.
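To sketch the more complex operations mentioned above, the example below uses a small, invented data.csv (its columns name, team and hours are hypothetical) to print selected fields with a comma as the output separator, compute a total, and filter rows.

```shell
# Hypothetical CSV file; the names and numbers are invented for the example
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
printf '%s\n' 'name,team,hours' 'alice,ops,5' 'bob,dev,3' 'carol,ops,4' > "$tmp/data.csv"

# Fields 1 and 3; -v OFS=',' keeps the output comma-separated
# (by default awk joins print arguments with a single space)
awk -F ',' -v OFS=',' '{print $1, $3}' "$tmp/data.csv"

# Calculate a total: skip the header row (NR > 1), sum field 3, print at the end
awk -F ',' 'NR > 1 {total += $3} END {print total}' "$tmp/data.csv"

# Filter rows: print the name only where the team column equals "ops"
awk -F ',' '$2 == "ops" {print $1}' "$tmp/data.csv"
```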

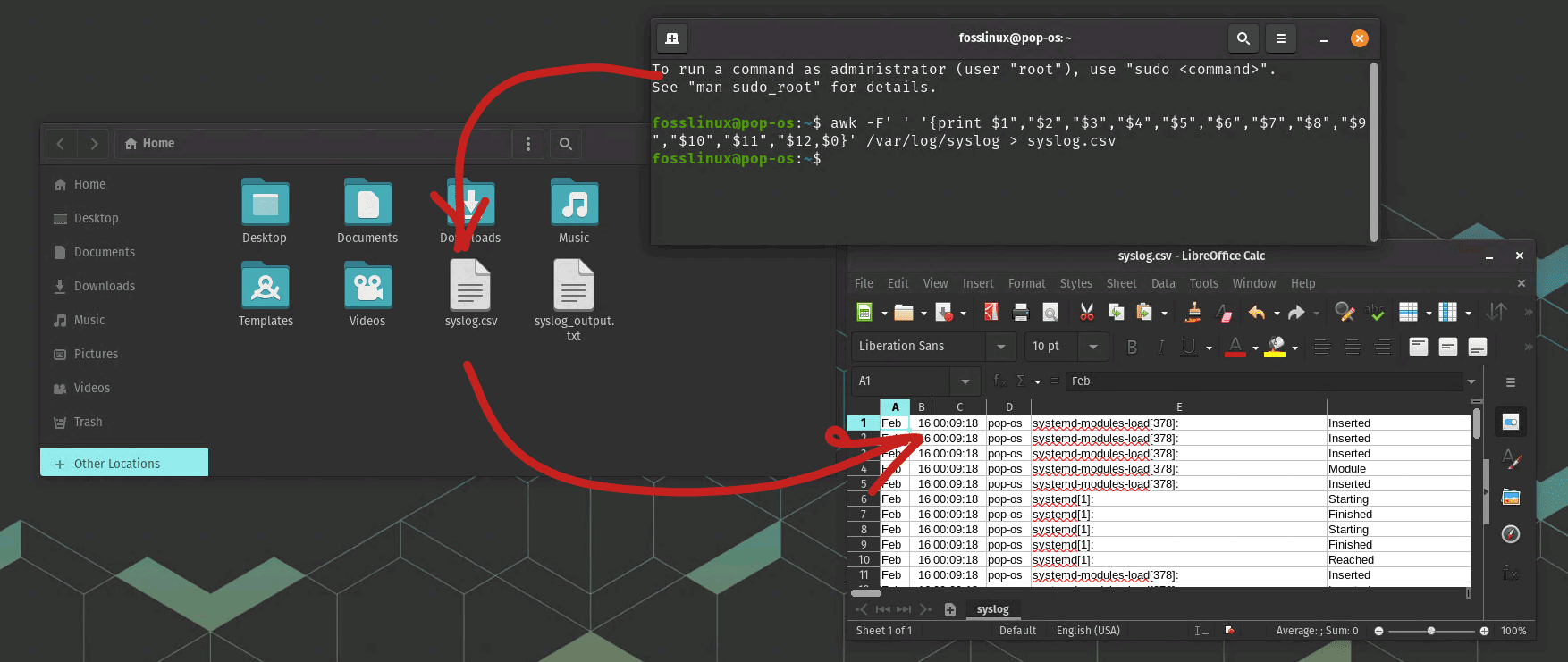

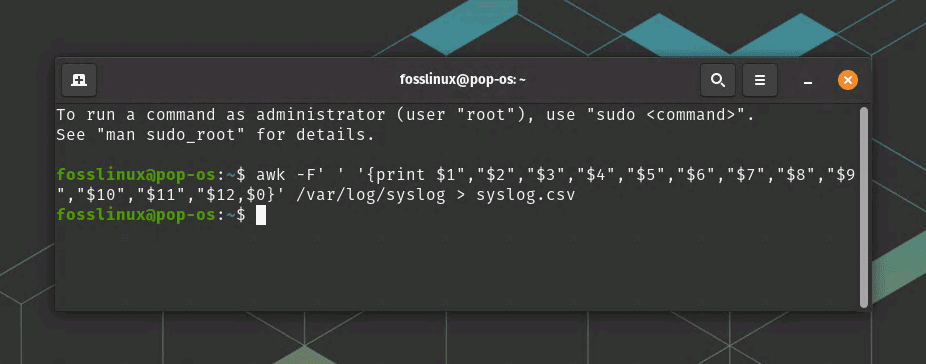

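For example, let’s export the /var/log/syslog file to a file called syslog.csv. The command below should work. Because the output is redirected to a relative path, syslog.csv is saved in your current working directory (your “Home” directory if you run the command from there).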

awk -F' ' '{print $1","$2","$3","$4","$5","$6","$7","$8","$9","$10","$11","$12","$0}' /var/log/syslog > syslog.csv

Export syslog to csv file command

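This command uses the -F flag to set the field separator to a space (which is also awk’s default: fields are split on runs of spaces and tabs) and uses print to output the first twelve fields separated by commas. The $0 at the end prints the entire original line, so the full log message is included as the last column of the CSV file. Finally, the output is redirected to a file called syslog.csv. Keep in mind that this is a quick conversion rather than strict CSV: commas inside log messages are not quoted, so they end up creating extra columns.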
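Since the one-liner above doesn’t quote the message text, here’s a slightly more careful sketch that produces four columns (timestamp, host, tag, message) and quotes the message the way CSV expects. It assumes the classic “Mon DD HH:MM:SS host tag: message” syslog layout and uses two invented log lines; systems that log ISO-style timestamps would need different field positions.

```shell
# Two invented lines in the classic "Mon DD HH:MM:SS host tag: message" layout
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
printf '%s\n' \
  'Jan 10 10:00:01 myhost sshd[42]: Accepted key for "bob", port 22' \
  'Jan 10 10:00:05 myhost cron[7]: job finished' > "$tmp/syslog"

awk -v OFS=',' '
{
  ts   = $1 " " $2 " " $3       # the timestamp spans the first three fields
  host = $4
  tag  = $5
  sub(/:$/, "", tag)            # strip the trailing colon from the tag
  msg = $0                      # the message is everything after field 5,
  for (i = 1; i <= 5; i++)      # so remove the first five fields one at a time
    sub(/^[^ ]+ +/, "", msg)
  gsub(/"/, "\"\"", msg)        # CSV escaping: double any embedded quotes
  print ts, host, tag, "\"" msg "\""
}' "$tmp/syslog" > "$tmp/syslog.csv"

cat "$tmp/syslog.csv"
```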

3. sed

Sed is a stream editor that can be used to transform text data. It’s particularly useful for making substitutions in files or output. You can use sed to perform search and replace operations, delete lines that match a pattern, or insert new lines into a file.

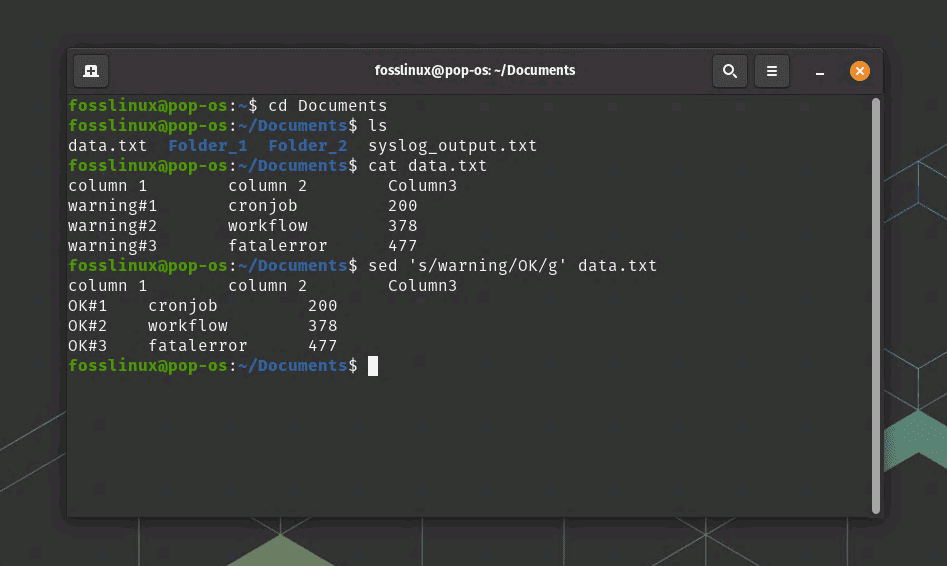

Here’s an example of how to use sed to replace a string in a file:

sed 's/warning/OK/g' data.txt

sed command usage to transform data

This command prints the contents of data.txt with every occurrence of “warning” replaced by “OK”; the g flag replaces all matches on a line, not just the first one. The file itself is not changed unless you add the -i option to edit it in place. You can also use regular expressions with sed to perform more complex substitutions. In the above screenshot, I used the cat command to display the contents of data.txt before running the sed command.
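The sketch below, run on a throwaway file with invented contents, shows the difference between printing the edited text and editing in place with -i, plus deleting lines that match a pattern.

```shell
# Hypothetical data.txt in a temporary directory
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
printf '%s\n' 'status: warning' 'disk: warning' '# temporary note' 'cpu: fine' > "$tmp/data.txt"

# Without -i, sed only prints the edited text; data.txt itself is unchanged
sed 's/warning/OK/g' "$tmp/data.txt"

# -i.bak edits the file in place and keeps the original as data.txt.bak
# (attaching the suffix directly to -i works with both GNU and BSD sed)
sed -i.bak 's/warning/OK/g' "$tmp/data.txt"

# /pattern/d deletes matching lines; here, lines that start with "#"
sed '/^#/d' "$tmp/data.txt"
```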

4. find

The find utility is a powerful tool for searching for files and directories based on various criteria. You can use find to search for files based on their name, size, modification time, or other attributes. You can also use find to execute a command on each file that matches the search criteria.

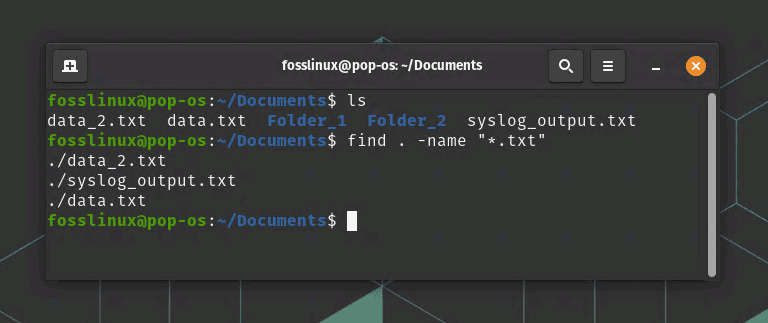

Here’s an example of how to use find to search for all files with a .txt extension in the current directory and its subdirectories:

find . -name "*.txt"

find command usage

This command will search the current directory and all its subdirectories for files with a .txt extension. You can use other options with find to refine your search, such as “-size” to search for files based on their size, or “-mtime” to search for files based on their modification time.
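Here’s a small sketch of the other find options mentioned above, run against a temporary directory tree with made-up file names. It assumes GNU or BSD find; -type f is added so that a directory whose name happens to end in .txt isn’t matched.

```shell
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
mkdir -p "$tmp/docs/old"
printf 'hello\n' > "$tmp/docs/a.txt"
printf 'world\n' > "$tmp/docs/old/b.txt"
head -c 2048 /dev/zero > "$tmp/docs/big.bin"   # a 2 KiB file
mkdir "$tmp/docs/notes.txt"                     # a directory whose name ends in .txt

# -type f restricts matches to regular files (the notes.txt directory is skipped)
find "$tmp/docs" -type f -name "*.txt"

# Files larger than 1 KiB (find rounds sizes up to whole units before comparing)
find "$tmp/docs" -type f -size +1k

# Files modified within the last 7 days
find "$tmp/docs" -type f -mtime -7

# Run a command on each match: {} is replaced by the path, \; ends the command
find "$tmp/docs" -type f -name "*.txt" -exec wc -l {} \;
```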

5. xargs

Xargs is a utility that reads items from standard input and passes them as arguments to a command. It’s particularly useful when you need to perform the same operation on many files, or when the list of items is too long to fit on a single command line: xargs splits its input on blanks and newlines and, if necessary, runs the command several times with as many arguments as fit in each invocation.

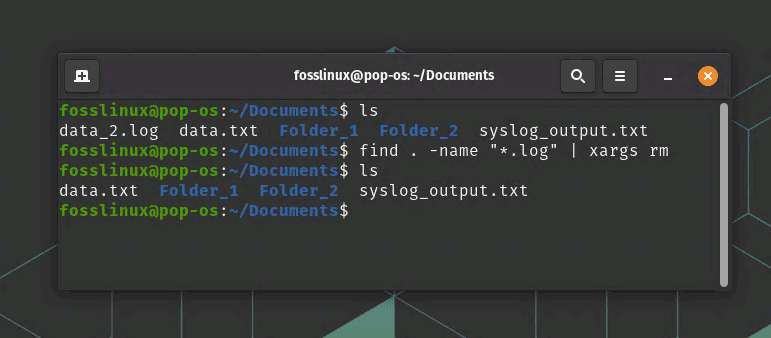

Here’s an example of how to use xargs to delete all files with a .log extension in the current directory and its subdirectories:

find . -name "*.log" -print0 | xargs -0 rm

find and delete file using a condition

This command first searches the current directory and its subdirectories for files with a .log extension. It then pipes the list of file names to xargs, which passes them as arguments to the rm command. The -print0 and -0 options separate the names with null characters instead of whitespace, so files whose names contain spaces are handled correctly. In the above screenshot, you can see data_2.log before running the command; it was deleted after running it.

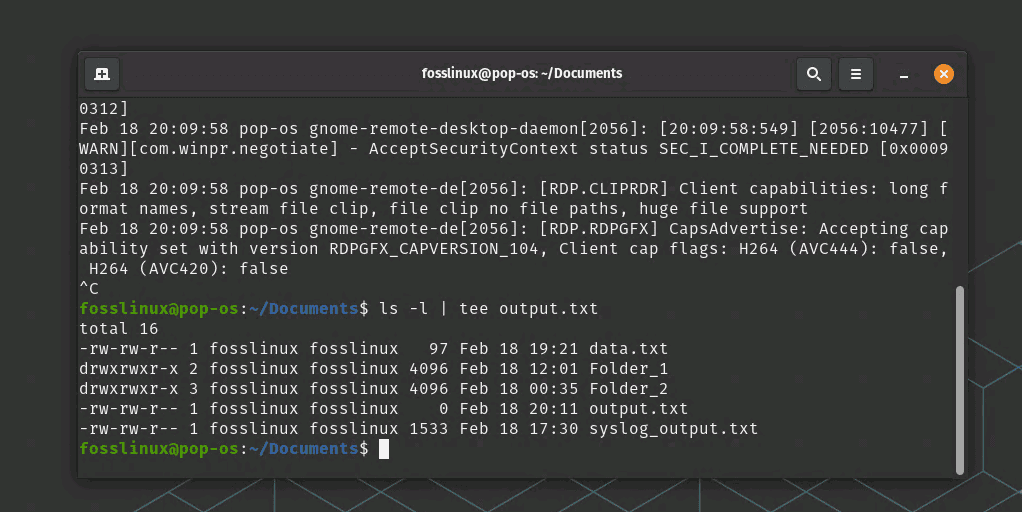
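File names containing spaces are the classic pitfall with find and xargs. This sketch, using made-up file names in a temporary directory, deletes .log files safely with -print0 and -0, then uses -n to show how xargs groups input items into command lines.

```shell
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
touch "$tmp/app.log" "$tmp/old report.log" "$tmp/keep.txt"

# A plain "find | xargs rm" would split "old report.log" into two words.
# -print0 and -0 separate names with NUL bytes, so spaces are safe.
find "$tmp" -type f -name "*.log" -print0 | xargs -0 rm -f

ls "$tmp"

# -n 2 passes at most two arguments per command, showing how xargs batches input
printf '%s\n' a b c d e | xargs -n 2 echo
```

If all you need is deletion, find’s own -delete action (available in GNU and BSD find) does the same job without xargs.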

6. tee

The tee utility allows you to redirect the output of a command to both a file and standard output. This is useful when you need to save the output of a command to a file while still seeing the output on the screen.

Here’s an example of how to use tee to save the output of a command to a file:

ls -l | tee output.txt

tee output command usage

This command lists the files in the current directory in long format and pipes the output to tee, which writes it both to the screen and to the output.txt file. By default, tee overwrites output.txt; use tee -a to append to it instead.

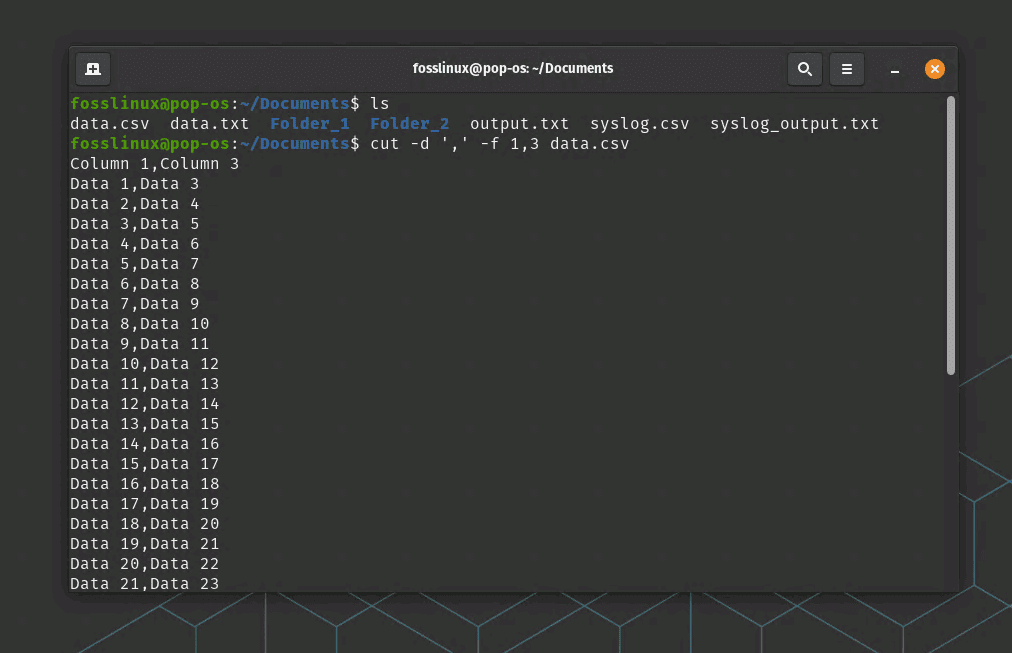
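The sketch below illustrates tee’s overwrite and append behavior and writing to more than one file at once; the file names are placeholders in a temporary directory.

```shell
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT

# By default tee truncates the file before writing...
echo "first run" | tee "$tmp/output.txt"
# ...while -a appends to it instead
echo "second run" | tee -a "$tmp/output.txt"

# tee can also write several files at once and sit in the middle of a pipeline:
# both copies get the full list, and wc still receives it on standard input
printf '%s\n' one two three | tee "$tmp/copy1.txt" "$tmp/copy2.txt" | wc -l
```

A common real-world use is appending to a root-owned file with echo "text" | sudo tee -a /path/to/file: a plain sudo echo "text" >> /path/to/file fails because the redirection is performed by your unprivileged shell, not by sudo.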

7. cut

The cut utility allows you to extract specific fields from a line of input data. It’s particularly useful for working with delimited data, such as CSV files. Cut allows you to specify the field delimiter and the field numbers that you want to extract.

Here’s an example of how to use cut to extract the first and third fields from a CSV file:

cut -d ',' -f 1,3 data.csv

Cut command usage

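This command sets the field delimiter to “,” and then extracts the first and third fields from each line in the data.csv file. Keep in mind that cut splits on every delimiter, so it does not handle quoted CSV fields that themselves contain commas.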

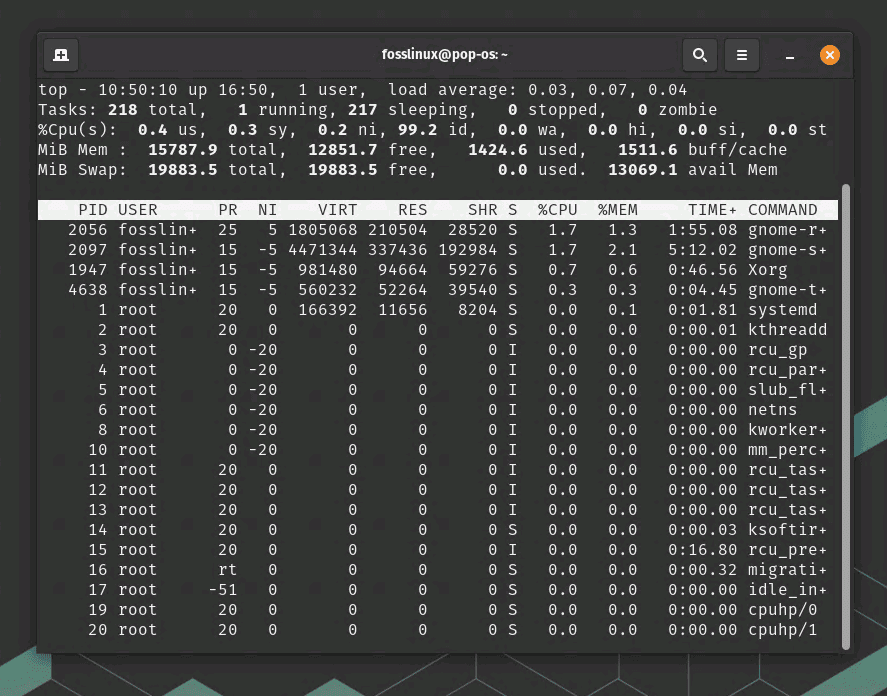
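Here’s a sketch with an invented data.csv that shows a field range and character selection with -c, along with the quoting limitation: the quoted name “Lee, Jr.” contains a comma, and cut splits it anyway.

```shell
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
printf '%s\n' 'id,name,city' '1,Ana,Lima' '2,"Lee, Jr.",Oslo' > "$tmp/data.csv"

# Fields 1 and 3; the output keeps the input delimiter.
# On the last line cut splits inside the quotes and prints: 2, Jr."
cut -d ',' -f 1,3 "$tmp/data.csv"

# A range: field 2 through the end of each line
cut -d ',' -f 2- "$tmp/data.csv"

# -c selects characters instead of fields (the first 3 characters of each line)
cut -c 1-3 "$tmp/data.csv"
```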

8. top

The top utility displays real-time information about the processes running on your system. It shows the processes that are currently using the most system resources, such as CPU and memory. Top is a useful tool for monitoring system performance and identifying processes that may be causing problems.

Here’s an example of how to use top to monitor system performance:

top

top command usage

This command displays a continuously updated list of processes, sorted by CPU usage by default. You can use the arrow keys to scroll through the list, press Shift+M or Shift+P to sort by memory or CPU usage, and press the “q” key to exit top.

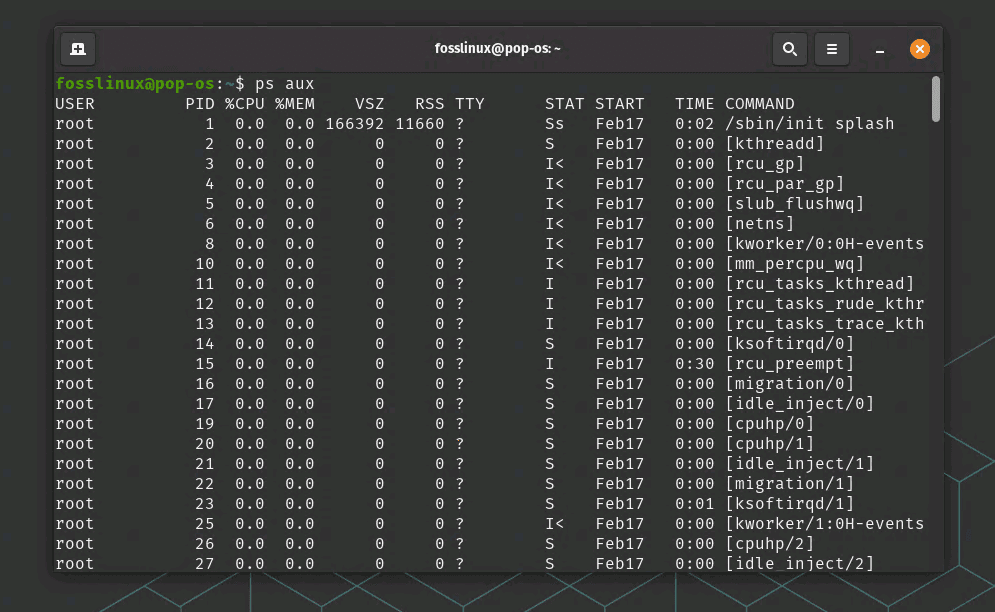
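top is interactive by default, which makes it awkward in scripts. Assuming the procps-ng version of top shipped with most Linux distributions, batch mode prints a plain-text snapshot that you can pipe or save; the snapshot file here is just a temporary file.

```shell
# -b (batch mode) prints plain text instead of the interactive display,
# and -n 1 exits after a single iteration, so the output can be piped or saved
top -b -n 1 | head -n 15

# Save one snapshot to a file, for example from a cron job or a script
snapshot=$(mktemp)
trap 'rm -f "$snapshot"' EXIT
top -b -n 1 > "$snapshot"
```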

9. ps

The ps utility displays information about the processes running on your system, such as the process ID, the user that started the process, CPU and memory usage, and the command being run; with options like -ef it also shows the parent process ID. Unlike top, ps prints a one-time snapshot of the current state of the system, so to monitor specific processes over time you run it repeatedly.

Here’s an example of how to use ps to view the processes running on your system:

ps aux

ps aux command usage

This command displays a list of all processes running on the system, along with their process ID, user, CPU and memory usage, and command. You can use other options with ps to narrow or reorder the list; for example, ps -C nginx selects processes by command name, and ps aux --sort=-%mem sorts them by memory usage (both options belong to the procps version of ps found on most Linux distributions).
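Assuming the procps-ng ps found on most Linux distributions, the sketch below picks custom columns with -o, sorts by memory, selects processes by name with -C, and looks up a single PID. The bash name is only an example.

```shell
# Choose the columns yourself: PID, parent PID, owner, memory share, command name,
# sorted by memory use with the largest first (--sort is a procps-ng option)
ps -eo pid,ppid,user,%mem,comm --sort=-%mem | head -n 6

# Select processes by exact command name, here any running bash shells
ps -C bash -o pid,etime,cmd

# Look up a single process by PID: $$ expands to the PID of the current shell
ps -p $$ -o pid=,comm=
```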

10. rsync

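Rsync is a powerful utility for synchronizing files and directories between different locations. It is particularly useful for backups and for transferring files between servers or devices, because on repeated runs it copies only what has changed. For example, the following command copies the local /home directory to the /backup directory on a remote server, preserving permissions and timestamps (-a), listing files as they are transferred (-v), and compressing data in transit (-z). Because the source path has no trailing slash, the directory itself ends up as /backup/home on the remote side: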

rsync -avz /home user@remote:/backup
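Because a mistake with rsync can overwrite data, it’s worth trying it locally first. This sketch copies between two temporary directories (no remote host needed) to show a dry run with -n and the difference a trailing slash on the source makes. The directory names are placeholders.

```shell
tmp=$(mktemp -d)
trap 'rm -rf "$tmp"' EXIT
mkdir -p "$tmp/src/docs" "$tmp/backup"
printf 'notes\n' > "$tmp/src/docs/notes.txt"

# -n (--dry-run) with -v lists what would be copied without changing anything
rsync -avn "$tmp/src/docs" "$tmp/backup"

# No trailing slash on the source: the directory itself is copied,
# producing backup/docs/notes.txt
rsync -a "$tmp/src/docs" "$tmp/backup"

# Trailing slash: only the contents of the directory are copied,
# producing backup/notes.txt
rsync -a "$tmp/src/docs/" "$tmp/backup"
```

If you add --delete, rsync also removes files from the destination that no longer exist in the source, so previewing with -n first is a good habit.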

Conclusion

Bash utilities are a powerful set of tools that can help enhance your Linux experience. By learning how to use utilities like grep, awk, sed, find, and rsync, you can quickly and efficiently search for text, process structured data, modify files, locate files or directories, and synchronize data between different locations. With these utilities at your disposal, you can save time, boost your productivity, and improve your workflow on the Linux platform. So, whether you are a developer, system administrator, or just a curious user, taking the time to learn and master Bash utilities will be a valuable investment in your Linux journey.