With the number of AI developers growing, the Clear Linux Project is shifting its focus toward Deep Learning with the release of Deep Learning Reference Stack 4.0.

Intel, the company behind the Clear Linux Project, recognizes the significance of Artificial Intelligence and how rapidly it has been evolving. Accordingly, the company has committed to accelerating enterprise and ecosystem development for DL (Deep Learning) workloads. As part of this effort, Intel introduced an integrated Deep Learning Reference Stack, whose new version arrived earlier this week.

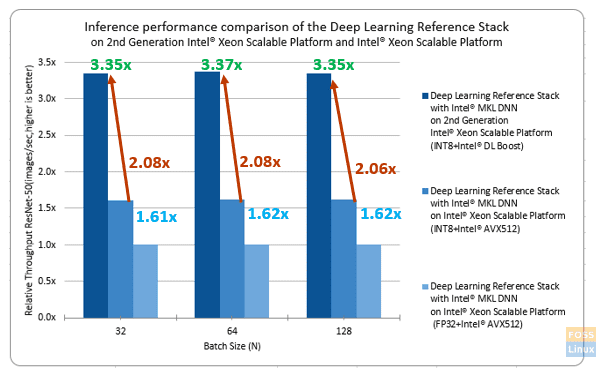

The stack targets the Deep Learning side of Artificial Intelligence and is optimized for the Intel® Xeon® Scalable processor family.

For this update, the developers focused on customer feedback and on improving the user experience, so users should find this release more stable than previous ones.

Now that we’ve briefly introduced the Deep Learning Reference Stack, let’s see what its new version has to offer.

What’s New

With this update, AI developers can customize solutions more easily, deal with less complexity, and quickly prototype and deploy Deep Learning workloads. This is made possible by several new additions, starting with TensorFlow 1.14, an open-source machine learning platform that helps both researchers and practitioners build solutions and develop software based on Machine Learning.

Deep Learning Reference Stack 4.0 also ships with the Intel® OpenVINO™ model server, version 2019_R1.1, which lets people working with neural networks serve their models more efficiently on Intel processors.

Another addition is Intel Deep Learning Boost (DL Boost) with AVX-512 Vector Neural Network Instructions (Intel AVX-512 VNNI), which accelerates deep neural network workloads, particularly low-precision (INT8) inference. Lastly, the release also includes a Deep Learning compiler, TVM* 0.6.
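Since DL Boost depends on the processor actually supporting the AVX-512 VNNI instructions, it can help to check for them before expecting a speedup. The following is a minimal sketch, assuming a Linux host that exposes `/proc/cpuinfo`, where the kernel lists the `avx512_vnni` feature flag on supported CPUs. The helpers `cpu_flags` and `has_vnni` are hypothetical names for illustration and are not part of the Reference Stack.

```python
from __future__ import annotations

from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the feature flags reported in /proc/cpuinfo-style text.

    Assumes the x86 Linux format, where features appear on lines such as
    "flags : fpu sse ... avx512_vnni".
    """
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def has_vnni(cpuinfo_text: str) -> bool:
    """Return True if the AVX-512 VNNI flag used by DL Boost is advertised."""
    return "avx512_vnni" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print("AVX-512 VNNI available:", has_vnni(path.read_text()))
    else:
        print("/proc/cpuinfo not found; this check assumes a Linux host")
```

Parsing text rather than reading the file inside `has_vnni` keeps the check easy to test with sample input on machines that lack VNNI.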

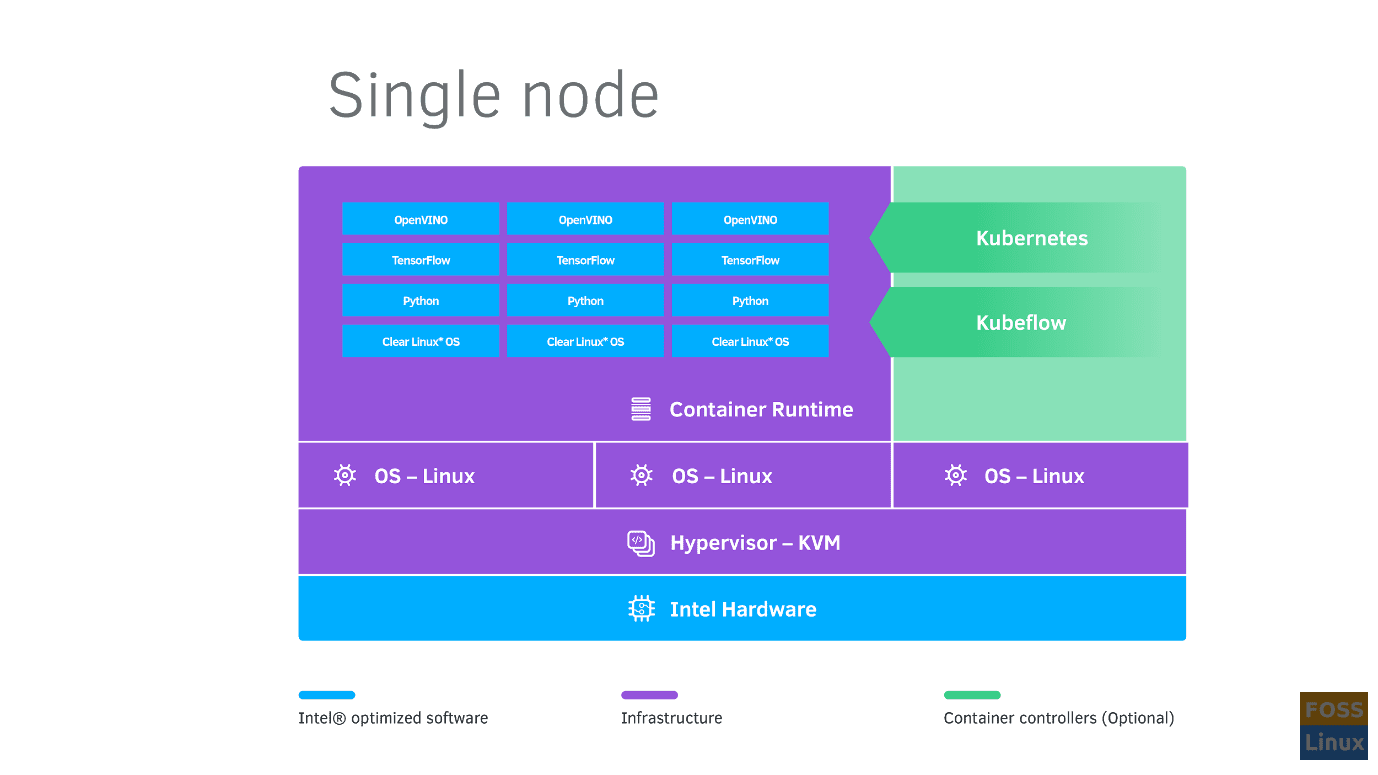

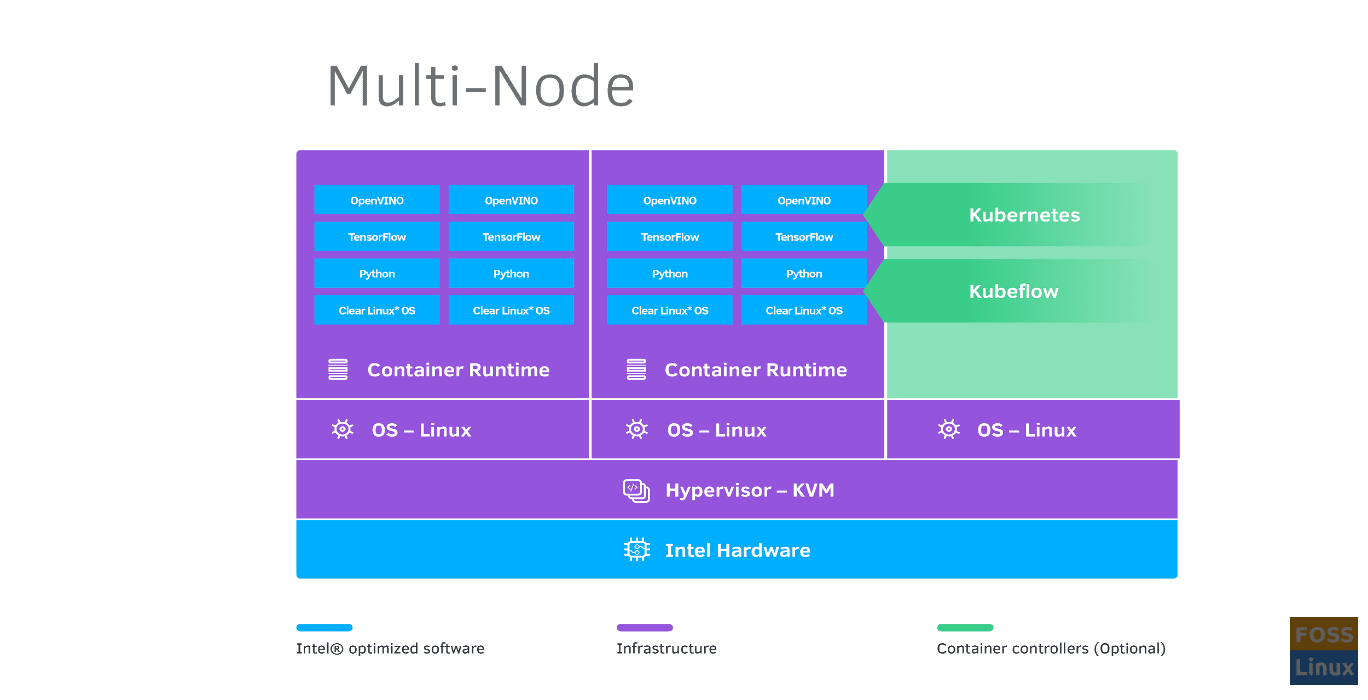

It is also worth mentioning that the Deep Learning Reference Stack supports both single-node and multi-node architectures. Have a look at the diagrams above to see how each one is structured.

Apart from the additions mentioned above, the new Reference Stack also offers updated developer frameworks and tools. Because the stack is part of the Clear Linux Project, it comes with the Clear Linux operating system, and the Deep Learning use cases have been tested on this version of the OS.

The stack also includes Kubernetes to orchestrate containerized applications across multi-node clusters with awareness of the Intel platform. For containers and libraries, it provides Docker containers, Kata Containers (with Intel® VT), and the Intel® Math Kernel Library for Deep Neural Networks (MKL-DNN).

As a deep learning stack, it supports Python application and service execution. The stack also uses Kubeflow Seldon for deployment and JupyterHub for the user experience.

Finally, the Deep Learning Reference Stack has been designed primarily with Intel architecture in mind, so users can get the most out of their processors with it, as the chart below shows.

Conclusion

The Deep Learning Reference Stack can benefit AI developers who also happen to use Intel processors, and this update should give them an even better experience when developing intelligent applications. Interested in learning more about this release? Click here.