Linux containers have been around for some time, but they became widely available when container support was introduced in the Linux kernel in 2008. Containers are lightweight, executable application components that combine app source code with the OS libraries and dependencies required to run the code in any environment. In addition, they serve as an application packaging and delivery technology that combines application isolation with the flexibility of image-based deployment.

Linux containers use control groups for resource management, namespaces for system process isolation, and SELinux to enable secure multi-tenancy and reduce security threats or exploits. These technologies provide an environment to produce, run, manage, and orchestrate containers.

This article is an introductory guide to the main elements of Linux container architecture, how containers compare with KVM virtualization, image-based containers, Docker containers, and container orchestration tools.

Container architecture

A Linux container utilizes key Linux kernel elements such as cgroups, SELinux, and namespaces. Namespaces ensure system process isolation while cgroups (control groups), as the name suggests, are used to control Linux system resources. SELinux is used to assure separation between the host and containers and between individual containers. You can employ SELinux to enable secure multi-tenancy and reduce the potential for security threats and exploits. After the kernel, we have the management interface that interacts with other components to develop, manage, and orchestrate containers.

SELinux

Security is a critical component of any Linux system or architecture. SELinux should be the first line of defense for a secure container environment. SELinux is a security architecture for Linux systems that gives sysadmins more control over who and what can access the system, including your container environment. With it, you can isolate the host system from its containers and isolate containers from each other.

A reliable container environment requires a sysadmin to create tailored security policies. Linux systems provide tools such as podman and udica that help with generating SELinux container policies. Some container policies control how containers access host resources such as storage drives, devices, and the network. Such a policy helps harden your container environment against security threats and supports regulatory compliance.

The architecture creates a secure separation that prevents root processes within a container from interfering with services running outside it. For example, the system automatically assigns a Docker container an SELinux context specified in the SELinux policy. As a result, SELinux always appears to be disabled inside a container even though it is running in enforcing mode on the host operating system.

Note: If SELinux is disabled or running in permissive mode on the host machine, containers are not securely separated.
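
To act on the note above, a host-side check can report which mode SELinux is in. The following is a minimal sketch, assuming the standard selinuxfs mount point `/sys/fs/selinux`; the helper names `describe_mode` and `selinux_mode` are made up for this illustration.

```python
from pathlib import Path

# Standard selinuxfs location (an assumption about the host's mount layout).
# The file contains "1" when SELinux is enforcing and "0" when it is permissive.
ENFORCE_FILE = Path("/sys/fs/selinux/enforce")


def describe_mode(raw):
    """Translate the content of the enforce file into a mode name.

    `raw` is None when the file could not be read, which is treated as
    "disabled" (SELinux is off, absent, or hidden from this process).
    """
    if raw is None:
        return "disabled"
    return "enforcing" if raw.strip() == "1" else "permissive"


def selinux_mode(enforce_file=ENFORCE_FILE):
    """Return the SELinux mode as seen by the current process."""
    try:
        return describe_mode(Path(enforce_file).read_text())
    except OSError:
        return describe_mode(None)


if __name__ == "__main__":
    mode = selinux_mode()
    print(f"SELinux mode: {mode}")
    if mode != "enforcing":
        print("Warning: containers on this host are not confined by SELinux.")
```

Run it on the host rather than inside a container, because SELinux appears disabled from within a container.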

Namespaces

Kernel namespaces provide process isolation for Linux containers. They create an abstraction of a system resource so that it appears as a separate instance to the processes within a namespace. In essence, containers can use system resources simultaneously without creating conflicts. Namespace types include mount, UTS, network, PID, IPC, and user namespaces.

- Mount namespaces isolate file system mount points available for a group of processes. Other services in a different mount namespace can have alternate views of the file system hierarchy. For example, each container in your environment can have its own /var directory.

- UTS namespaces isolate the node name and domain name system identifiers. They allow each container to have a unique hostname and NIS domain name.

- Network namespaces isolate network devices, firewall rules, and IP routing tables. In essence, you can design a container environment to use separate virtual network stacks with virtual or physical devices and even assign them unique IP addresses or iptables rules.

- PID namespaces allow system processes in different containers to use the same PID. In essence, each container can have a unique init process to manage the container’s life cycle or initialize system tasks. Each container will have its own unique /proc directory to monitor processes running within the container. Note that a container is only aware of its processes/services and cannot see other processes running in different parts of the Linux system. However, a host operating system is aware of processes running inside a container.

- IPC namespaces isolate system interprocess communication resources (System V IPC objects and POSIX message queues) so that different containers can create shared memory segments with the same name. However, a container cannot interact with other containers’ memory segments or shared memory.

- User namespaces allow a sysadmin to specify host UIDs dedicated to a container. For example, a system process can have root privileges inside a container but be unprivileged for operations outside the container.
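
The namespaces a process belongs to are visible under `/proc/<pid>/ns`, where each entry is a symbolic link such as `pid:[4026531836]`. The sketch below reads those links and compares two processes; the function names are hypothetical, and on systems without `/proc` it simply returns an empty result.

```python
import os
import re

# Link targets under /proc/<pid>/ns look like "net:[4026531992]".
NS_LINK = re.compile(r"^(?P<kind>\w+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a link target such as 'pid:[4026531836]' into ('pid', 4026531836)."""
    match = NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match["kind"], int(match["inode"])


def namespaces_of(pid="self"):
    """Return {entry name: namespace inode} for a process, or {} if unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    try:
        entries = os.listdir(ns_dir)
    except OSError:  # not Linux, or the process is not accessible
        return result
    for entry in entries:
        try:
            _kind, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        except (OSError, ValueError):
            continue
        result[entry] = inode
    return result


def shared_namespaces(a, b):
    """Names of the namespaces that two processes have in common."""
    return sorted(name for name in a if name in b and a[name] == b[name])


if __name__ == "__main__":
    for name, inode in sorted(namespaces_of().items()):
        print(f"{name:20} {inode}")
```

Two processes that show the same inode for an entry share that namespace. A containerized process typically shows different inodes than a host process for most entries.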

Control groups

Kernel cgroups enable system resource management between different groups of processes. Cgroups allocate CPU time, network bandwidth, or system memory among user-defined tasks.
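
As an illustration, on systems that use cgroup v2 the limits applied to a group are exposed as small text files such as `cpu.max` and `memory.max`. The sketch below parses them; it assumes the cgroup v2 hierarchy is mounted at `/sys/fs/cgroup`, and the helper names are invented for this example.

```python
from pathlib import Path


def parse_cpu_max(text):
    """Parse cgroup v2 'cpu.max' content ("<quota> <period>") into a CPU count.

    Returns None when the quota is "max", meaning no CPU limit.
    """
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def parse_memory_max(text):
    """Parse cgroup v2 'memory.max' content into bytes, or None for no limit."""
    value = text.strip()
    return None if value == "max" else int(value)


def read_limits(cgroup_dir="/sys/fs/cgroup"):
    """Read the limits of one cgroup directory.

    A value of None means "no limit" or "file not readable" (for example on
    a cgroup v1 host, or in the root cgroup, which has no such files).
    """
    parsers = (("cpu.max", parse_cpu_max), ("memory.max", parse_memory_max))
    limits = {}
    for filename, parser in parsers:
        try:
            limits[filename] = parser((Path(cgroup_dir) / filename).read_text())
        except (OSError, ValueError):
            limits[filename] = None
    return limits


if __name__ == "__main__":
    print(read_limits())
```

For example, a `cpu.max` content of `50000 100000` means the group may use 50,000 microseconds of CPU time in every 100,000 microsecond period, which is half of one CPU.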

Containers vs. KVM virtualization

Both containers and KVM virtualization have advantages and disadvantages that determine which use cases or environments they suit. For starters, KVM virtual machines require a kernel of their own, while containers share the host kernel. Thus, one key advantage of containers is that you can launch more containers than virtual machines on the same hardware resources.

Linux containers

| Advantages | Disadvantages |
|---|---|
| Designed to manage isolation of containerized applications. | Container isolation is not at the same level as KVM virtualization. |
| System-wide host configurations or changes are visible in each container. | Increased complexity in managing containers. |
| Containers are lightweight and offer faster scalability of your architecture. | Requires extensive sysadmin skills in managing logs, persistent data with the right read and write permission. |
| It enables quick creation and distribution of applications. | |
| It facilitates lower storage and operational cost in regards to container image development and procurement. | |

Areas of applications:

- Application architectures that need to scale extensively.

- Microservice architecture.

- Local application development.

KVM virtualization

| Advantages | Disadvantages |
|---|---|
| KVM enables full boot of operating systems like Linux, Unix, macOS, and Windows. | Requires extensive administration of the entire virtual environment. |
| A guest virtual machine is isolated from the host changes and system configurations. You can run different versions of an application on the host and virtual machine. | It can take longer to set up a new virtual environment, even with automation tools. |
| Running separate kernels provides better security and separation. | Higher operational costs associated with the virtual machine, administration, and application development. |
| Clear allocation of resources. | |

Areas of application:

- System environments that require clearly dedicated resources.

- Systems that require an independent running kernel.

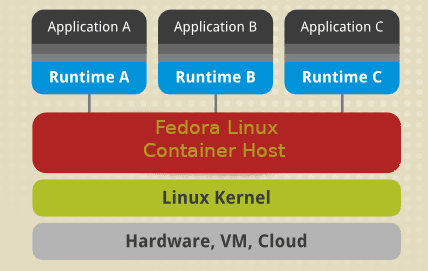

Image-based container

Image-based containers package applications with individual run time stacks, making provisioned containers independent of the host operating system. In essence, you can run several instances of an application, each on a different platform. To make such an architecture possible, you have to deploy and run the container and application run time as an image.

A system architecture made of image-based containers allows you to host multiple instances of an application with minimal overhead and greater flexibility. It enables the portability of containers that are not dependent on host-specific configurations. Images can exist without containers. However, a container needs an image to exist. In essence, containers depend on images to create a run time environment to run an application.

Container

A container is created based on an image that holds necessary configuration data to create an active component that runs as an application. Launching a container creates a writable layer on top of the specified image to store configuration changes.

Image

An image is a static snapshot of a container’s configuration data at a specific time. It is a read-only layer. All configuration changes are made in the top-most writable layer of a container, and you can save them only by creating a new image. Each image depends on one or more parent images.

Platform-image

A platform image has no parent. Instead, you use it to define the runtime environment, packages, and utilities necessary for a containerized application to launch and run. For example, to work with Docker containers, you pull a read-only platform image. Any changes you define are reflected in the copied images stacked on top of the initial Docker image. Next, Docker creates an application layer that contains the added libraries and dependencies for the containerized application.

A container can be very large or small depending on the number of packages and dependencies included in the application layer. Moreover, further layering of the image is possible with independent third-party software and dependencies. Thus, from an operational point of view, there can be many layers behind an image. However, the layers appear as a single container to the user.
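
The relationship between read-only image layers and a container's writable layer can be modeled in a few lines. This is a toy model only, not how Docker stores layers: it uses Python's `collections.ChainMap`, where lookups fall through the stack of layers and writes land in the top-most one. The file paths and contents are invented for the illustration, and file deletion is not modeled.

```python
from collections import ChainMap

# Hypothetical read-only image layers, each mapping a path to file content.
platform_layer = {"/etc/os-release": "ID=example", "/bin/sh": "<shell>"}
app_layer = {"/app/server.py": "print('v1')", "/etc/app.conf": "debug=false"}


def start_container(*image_layers):
    """Stack a fresh, empty writable layer on top of the image layers.

    Layers are given top-most first. Lookups search the writable layer and
    then each image layer in order; writes only touch the writable layer.
    """
    writable = {}
    return ChainMap(writable, *image_layers)


# Two containers started from the same image share its read-only layers.
c1 = start_container(app_layer, platform_layer)
c2 = start_container(app_layer, platform_layer)

# A configuration change in c1 is stored in c1's writable layer only.
c1["/etc/app.conf"] = "debug=true"

print(c1["/etc/app.conf"])   # value from c1's writable layer
print(c2["/etc/app.conf"])   # value still served by the shared image layer
print(c1["/bin/sh"])         # found by falling through to the platform layer

# "Saving" the change means turning the writable layer into a new image layer.
committed_layer = dict(c1.maps[0])
new_image = (committed_layer, app_layer, platform_layer)
c3 = start_container(*new_image)
```

The container sees one merged view even though several layers sit behind it, and the original image layers stay unchanged no matter what a container writes.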

Docker containers

Docker is a containerization platform used to develop, maintain, deploy, and orchestrate applications and services. Docker containers involve less overhead than virtual machines in configuring or setting up environments. The containers do not have a separate kernel and run directly on the kernel of the host operating system. Docker utilizes namespaces and control groups to use the host OS resources efficiently.

Docker image

An instance of a container runs one process in isolation without affecting other applications. In essence, each containerized app has unique configuration files.

The Docker daemon communicates with the containers and allocates resources to a containerized app depending on how much it needs to run. In contrast to a Linux container (LXC), a Docker container specializes in deploying a single containerized application. Docker runs natively on Linux but also supports other operating systems like macOS and Windows.
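
Resource limits for a single container can also be set explicitly when it is started. The sketch below only builds and prints a `docker run` command line and does not execute it. The `--rm`, `--name`, `--memory`, and `--cpus` options are standard `docker run` flags, while the helper name `docker_run_command` and the example image and values are assumptions for illustration.

```python
import shlex


def docker_run_command(image, command=(), *, memory=None, cpus=None, name=None):
    """Build the argument list for a one-off `docker run` invocation.

    memory: memory limit such as "64m"; cpus: number of CPUs such as 0.5.
    """
    argv = ["docker", "run", "--rm"]   # --rm removes the container on exit
    if name:
        argv += ["--name", name]
    if memory:
        argv += ["--memory", memory]
    if cpus is not None:
        argv += ["--cpus", str(cpus)]
    argv.append(image)
    argv.extend(command)
    return argv


if __name__ == "__main__":
    # Example values only: a small public image and a trivial command.
    argv = docker_run_command("alpine", ["echo", "hello"], memory="64m", cpus=0.5)
    print(shlex.join(argv))
```

Docker enforces limits like these through the control groups described earlier.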

Key benefits of docker containers

- Portability: You can deploy a containerized app on any other system where Docker Engine is running, and your application will perform exactly as it did when you tested it in your development environment. As a developer, you can confidently share a Docker app without having to install additional packages or software, regardless of the operating system your teams are using. Docker goes hand in hand with versioning, and you can share containerized applications easily without breaking the code.

- Containers can run anywhere: on any supported OS such as Linux, Windows, and macOS, in virtual machines, on-premises, and in the public cloud. The widespread popularity of Docker images has led to extensive adoption by cloud providers such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure.

- Performance: Containers do not contain a full operating system, which gives them a much smaller footprint than virtual machines and makes them generally faster to create and start.

- Agility: The performance and portability of containers enable a team to create an agile development process that improves continuous integration and continuous delivery (CI/CD) strategies to deliver the right software at the right time.

- Isolation: A Docker container with an application also includes the relevant versions of any dependencies and software that your application requires. Docker containers are independent of one another, and other containers/applications that require different versions of the specified software dependencies can exist in the same architecture without a problem. For example, it ensures that an application like MariaDB running in Docker uses only its own resources, which maintains consistent system performance.

- Scalability: Docker allows you to create new containers and applications on demand.

- Collaboration: The process of containerization in Docker allows you to segment an application development process. It allows developers to share, collaborate, and solve any potential issues quickly with no massive overhaul needed, creating a cost-effective and time-saving development process.

Container orchestration

Container orchestration is the process of automating deployment, provisioning, management, scaling, security, lifecycle, load balancing, and networking of containerized services and workloads. The main benefit of orchestration is automation. Orchestration supports a DevOps or agile development process that allows teams to develop and deploy in iterative cycles and release new features faster. Popular orchestration tools include Kubernetes, Amazon ECS, Docker Swarm, and Apache Mesos.

Container orchestration essentially involves a process where a developer writes a YAML or JSON configuration file that defines a desired configuration state. The orchestration tool then runs the file to achieve the desired system state. The YAML or JSON file typically defines the following components:

- The container images that make up an application and the image registry.

- The resources, like storage, to provision a container with.

- The network configurations between containers.

- The image versioning.
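
As one possible illustration of such a file, the sketch below generates a Kubernetes Deployment manifest as JSON. The field names follow the Kubernetes `apps/v1` Deployment format; the application name, the registry `registry.example.com`, the image tag, and the resource values are all hypothetical.

```python
import json


def deployment_manifest(name, image, replicas=2, port=80):
    """Return a Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        # registry/repository:tag names the image and pins its version
                        "image": image,
                        # network: the port the container listens on
                        "ports": [{"containerPort": port}],
                        # resources the container may use
                        "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
                        # storage: mount the volume declared below
                        "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                    }],
                    "volumes": [{"name": "data", "emptyDir": {}}],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest("web", "registry.example.com/shop/web:1.4.2", replicas=3)
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and handed to the orchestration tool, which then works toward the state it describes.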

The orchestration tool schedules deployment of the containers or container replicas to hosts based on available CPU capacity, memory, or other constraints specified in the configuration file. Once you deploy containers, the orchestration tool manages the lifecycle of an app based on the container definition in the configuration file. For example, it can handle the following aspects:

- Scale up or down, allocate resources, and balance load.

- Maintain availability and performance of containers in the event of an outage or shortage of system resources.

- Collect and store log data to monitor the health and performance of containerized applications.
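
The scheduling step described above can be sketched as a simple placement loop. This is a deliberately naive strategy (pick the fitting node with the most free CPU), not the algorithm of any particular orchestration tool, and the `Node` class, node names, and capacities are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int        # free CPU in millicores (1000 = one core)
    free_mem_mib: int      # free memory in MiB
    containers: list = field(default_factory=list)


def schedule(replicas, cpu_m, mem_mib, nodes):
    """Place each replica on the fitting node with the most free CPU.

    Returns the list of node names chosen, one per replica, and raises
    RuntimeError when no node has enough free CPU and memory.
    """
    placements = []
    for index in range(replicas):
        fitting = [n for n in nodes
                   if n.free_cpu_m >= cpu_m and n.free_mem_mib >= mem_mib]
        if not fitting:
            raise RuntimeError(f"no node has room for replica {index}")
        target = max(fitting, key=lambda n: (n.free_cpu_m, n.free_mem_mib))
        # Reserve the resources so later replicas see the reduced capacity.
        target.free_cpu_m -= cpu_m
        target.free_mem_mib -= mem_mib
        target.containers.append(f"replica-{index}")
        placements.append(target.name)
    return placements


if __name__ == "__main__":
    cluster = [Node("node-a", 1000, 2048), Node("node-b", 1000, 2048)]
    print(schedule(3, cpu_m=500, mem_mib=512, nodes=cluster))
```

Because each placement reduces the free capacity of the chosen node, replicas tend to spread across nodes instead of piling onto one host.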

Kubernetes

Kubernetes is one of the most popular container orchestration platforms used to define the architecture and operations of cloud-native applications so that developers can focus on product development, coding, and innovation. Kubernetes allows you to build applications that span multiple containers, schedule them across a cluster, scale them, and manage their health and performance over time. In essence, it eliminates the manual processes involved in deploying and scaling containerized applications.
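
The idea of replacing manual work with automation can be pictured as a loop that compares the desired state with what is actually running and decides what to change. The sketch below is a generic illustration of that idea, not Kubernetes code; the `reconcile` function and the pod names are hypothetical.

```python
def reconcile(desired_replicas, pods):
    """Compare desired state with observed pods and return the actions to take.

    `pods` maps a pod name to True (healthy) or False (unhealthy).
    Each action is a tuple: ("delete", name) or ("create", None).
    """
    # Unhealthy pods are always removed.
    actions = [("delete", name) for name, ok in pods.items() if not ok]
    healthy = sorted(name for name, ok in pods.items() if ok)
    missing = desired_replicas - len(healthy)
    if missing > 0:
        # Too few healthy pods: start replacements.
        actions += [("create", None)] * missing
    elif missing < 0:
        # Too many healthy pods: remove the surplus from the end of the list.
        actions += [("delete", name) for name in healthy[missing:]]
    return actions


if __name__ == "__main__":
    observed = {"web-1": True, "web-2": False}
    for action in reconcile(3, observed):
        print(action)
```

Running such a comparison repeatedly is what keeps an application at the requested scale without an operator stepping in.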

Key components of Kubernetes

- Cluster: A control plane with one or more computing machines/nodes.

- Control plane: A collection of processes that controls different nodes.

- Kubelet: It runs on nodes and ensures containers can start and run effectively.

- Pod: A group of containers deployed to a single node. All containers in a pod share an IP address, hostname, IPC, and other resources.

Kubernetes has become the industry standard in container orchestration. It provides extensive container capabilities, features a dynamic contributor community, and is highly extensible and portable. You can run it in a wide range of environments, such as on-premises or in a public cloud, and effectively use it with other container technologies.

Wrapping up

Containers are lightweight, executable application components consisting of source code, OS libraries, and dependencies required to run the code in any environment. Containers became widely popular in 2013 when the Docker platform was released. As a result, you will often find users in the Linux community using "Docker containers" and "containers" interchangeably to refer to the same thing.

There are several advantages to using Docker containers. However, not all applications are suitable for running in containers. As a general rule of thumb, applications with a graphical user interface are not suitable for use with Docker. On the other hand, containerized microservices and serverless architectures are essential for cloud-native applications.

This article has given you an introductory guide to containers in Linux, Docker images, and container orchestration tools like Kubernetes. You can build on this guide by working with containers, Docker Engine, and Kubernetes to learn how to develop and share containerized applications.